Test Environment Management Tools Compared

Five years ago, if you had been asked to recommend a “Test Environment Management” platform, you might have struggled. In fact, you might have struggled to identify one at all, particularly if you considered your own DevTest teams’ behaviour: lots of disruption, delays and misconfiguration, and the inevitable use of spreadsheets for tracking project bookings, MS Visio documents for capturing system information, email for reporting and, if you were lucky, some test automation for platform health checks. Not exactly elegant or scalable, but undoubtedly better than complete chaos.

However, things have changed. With a raft of solutions now claiming to solve this problem, The Last Frontier of the SDLC, the question is no longer “what” but “which”: which platform will meet our needs and address one of the SDLC’s biggest “Waste Areas”?

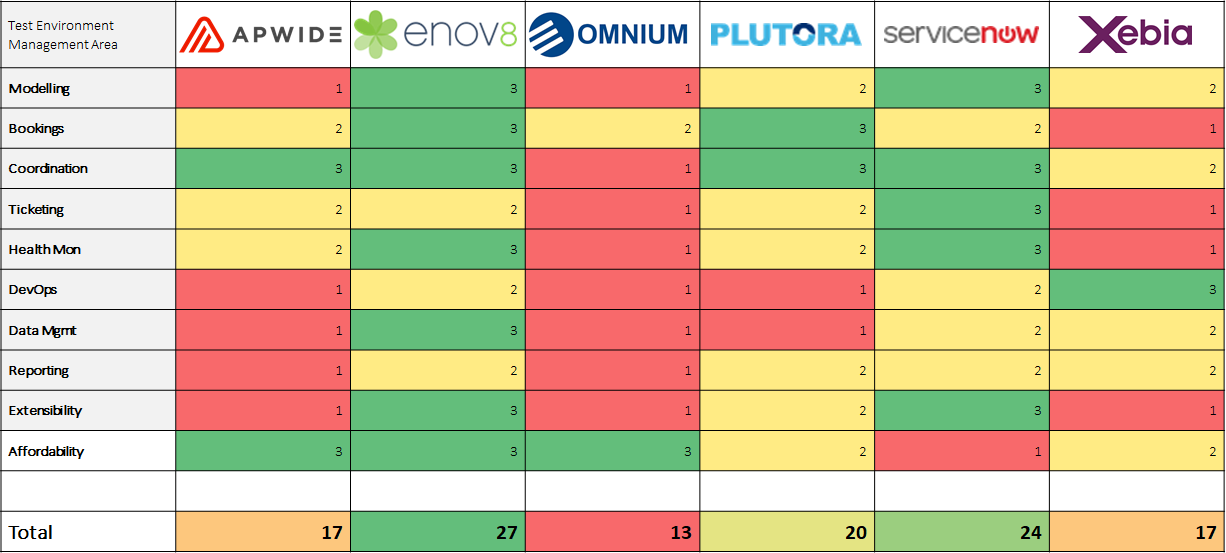

At TEM Dot we decided to compare six of the biggest players in this space across 10 key areas:

Key TEM Vendors

- Apwide

- Enov8

- Omnium

- Plutora

- ServiceNow

- Xebia

Key TEM Performance Areas

- Modelling

- Booking Management

- Coordination

- Ticketing

- Health Monitoring

- Automation & DevOps

- Data Management

- Reporting

- Extensibility

- Affordability

Test Environment Management Tool Scoring

Area-1 Environment Modelling

The ability to know what your Environments and Systems look like.

Historically, think Visio or your CMDB (if you have one).

Gold Medal Position:

Enov8 & ServiceNow both offer powerful visual CMDBs & component/discovery mapping.

Silver:

Plutora & Xebia offer modelling capability.

Bronze:

With Apwide & Omnium, modelling is achieved via tabular forms.

Area-2 Booking & Contention Management

The ability to capture environment requirements & manage contention on Environments & Systems.

Historically, think email & an attached Word document.
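At its core, contention is an interval-overlap problem: two bookings clash when they claim the same environment over overlapping dates. The following is a minimal, tool-agnostic sketch of that idea, assuming a hypothetical `Booking` record with inclusive start and end dates and a hypothetical `find_contention` helper; it is not how Enov8, Plutora or any other product compared here implements contention analysis.

```python
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class Booking:
    # Hypothetical booking record: which project wants which environment, and when.
    project: str
    environment: str
    start: date  # first day booked (inclusive)
    end: date    # last day booked (inclusive)


def find_contention(bookings):
    """Return (a, b) pairs of bookings that claim the same environment on overlapping dates."""
    conflicts = []
    # Group bookings by environment.
    by_env = {}
    for booking in bookings:
        by_env.setdefault(booking.environment, []).append(booking)
    for env_bookings in by_env.values():
        # Sort each environment's bookings by start date.
        env_bookings.sort(key=lambda b: b.start)
        for i, a in enumerate(env_bookings):
            for b in env_bookings[i + 1:]:
                if b.start > a.end:
                    break  # sorted by start, so no later booking can overlap `a`
                conflicts.append((a, b))
    return conflicts


# Example data (hypothetical projects and environments).
bookings = [
    Booking("Release A", "SIT1", date(2024, 3, 1), date(2024, 3, 10)),
    Booking("Release B", "SIT1", date(2024, 3, 8), date(2024, 3, 15)),
    Booking("Release C", "UAT", date(2024, 3, 1), date(2024, 3, 31)),
]
for a, b in find_contention(bookings):
    print(f"{a.environment}: {a.project} clashes with {b.project}")
# prints: SIT1: Release A clashes with Release B
```

Sorting by start date lets the inner loop stop at the first booking that begins after the current one ends, rather than comparing every pair.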

Gold Medal Position:

Enov8 & Plutora offer advanced booking & contention analysis methods.

Silver:

Apwide & ServiceNow offer booking request capability (see Ticketing).

Bronze:

Xebia has no obvious environment booking or contention mechanism.

Area-3 Environment Coordination

Tracking events & release activity across space (environments) & time (month, year etc.).

Historically, think MS Project plans.

Gold Medal Position:

Apwide, Enov8, Plutora, ServiceNow offer Environment & Release based calendaring.

Note: Enov8 & Plutora offer Runsheets & Implementation Plans respectively.

ServiceNow offers checklists.

Silver:

Xebia – calendaring is release-centric (as opposed to environment-centric).

Bronze:

Omnium (limited capability identified).

Area-4 Ticketing

Ticketing / IT Service Management to capture environment change requests, incidents etc.

Historically, think Remedy.

Gold Medal Position:

ServiceNow has advanced ITSM methods.

Silver:

Apwide (using Jira), Enov8, Plutora have solid Ticketing / Requests functionality.

Bronze:

Omnium & Xebia are dependent on other tools.

Area-5 Health Monitoring

The ability to check that Systems, Components or Interfaces are up.

Historically, think test automation scripting or server monitoring solutions like Zabbix.
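At its simplest, a health check asks whether each component answers on its expected network port. The following minimal sketch assumes plain TCP reachability is a good-enough proxy for “up” (real monitors often probe an application endpoint instead) and uses only Python’s standard `socket` module; `is_up` and `check_environment` are hypothetical helpers, not part of any vendor’s agent or API.

```python
import socket


def is_up(host: str, port: int, timeout: float = 2.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within `timeout` seconds."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts and name-resolution failures.
        return False


def check_environment(components: dict) -> dict:
    """Map each component name to 'UP' or 'DOWN' using a TCP reachability probe.

    `components` maps a component name to a (host, port) tuple.
    """
    return {
        name: ("UP" if is_up(host, port) else "DOWN")
        for name, (host, port) in components.items()
    }
```

For example, `check_environment({"SIT1 web": ("sit1-web.example.internal", 443)})` would return `{"SIT1 web": "UP"}` if that (hypothetical) host accepts connections within the two-second default timeout. Running such a check on a schedule and publishing the results is essentially what the scripted or agent-based approaches above automate.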

Gold Medal Position:

Enov8 & ServiceNow offer integration methods & native agents to monitor health.

Silver:

Apwide & Plutora have APIs that, in principle, allow system health updates.

Bronze:

Omnium & Xebia don’t play in this space.

Area-6 Automation & DevOps

The ability to automate key Environment Operations using code.

Gold Medal Position:

Xebia is a powerful release orchestrator (its primary purpose).

Silver:

ServiceNow Orchestration automates IT & Business Processes.

Enov8 offers an “agnostic” Scripting Hub (Orchestration Manager), Pipelines, Playbooks, Webhooks & URL Triggers.

Bronze:

Apwide integration is basic, but can be achieved with GET/POST methods.

Plutora needs other tools (like Dell Boomi) to automate/integrate properly. The SaaS-only option can also be limiting.

Omnium integrates with other tools to automate.

Area-7 Data Management

The ability to manage one’s data, e.g. extracting, masking and provisioning data.

Think Compuware File-Aid.
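Masking replaces sensitive values with realistic but harmless substitutes before data reaches a test environment. The following minimal sketch shows one common technique, deterministic keyed-hash pseudonymisation with HMAC-SHA256. It is not how Data Compliance Suite or any other product here works. `MASKING_KEY`, `mask_value` and `mask_record` are hypothetical names, and the key is a placeholder.

```python
import hashlib
import hmac

# Hypothetical placeholder secret; in practice load it from a secrets store, not source code.
MASKING_KEY = b"replace-with-a-secret-key"


def mask_value(value: str, key: bytes = MASKING_KEY, length: int = 12) -> str:
    """Deterministically replace a sensitive value with an opaque hex token.

    The same input always yields the same token, so joins across masked tables
    still line up, but the original value is not recoverable from the token alone.
    """
    digest = hmac.new(key, value.encode("utf-8"), hashlib.sha256).hexdigest()
    return digest[:length]


def mask_record(record: dict, pii_fields: set) -> dict:
    """Return a copy of `record` with every field named in `pii_fields` masked."""
    return {
        field: mask_value(str(value)) if field in pii_fields else value
        for field, value in record.items()
    }


# Example: mask the name and email of a (fictional) customer row.
row = {"customer_id": 42, "name": "Jane Citizen", "email": "jane@example.com", "plan": "gold"}
masked = mask_record(row, {"name", "email"})
```

Determinism is the design choice here: a random substitute would break referential integrity between tables, whereas a keyed hash keeps it as long as the same key is used everywhere.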

Gold Medal Position:

Enov8 seems to be the only solution that addresses test data. It supports data (PII/risk) profiling & masking and data bookings through its “Data Compliance Suite”. Enov8’s Visual Orchestrate can also be used to schedule other data tools.

Silver:

Xebia & ServiceNow capabilities are limited, but they can leverage their orchestrators to call other tools.

Bronze:

Apwide, Omnium & Plutora don’t appear to play in this space.

Area-8 Reporting

The ability to get & share insights about your Environments.

Historically, think drawing pretty pictures & graphs with PowerPoint.

Gold Medal Position:

A lot of the tools have solid reporting; however, our focus is environments, and none yet merits a Gold Medal.

Silver:

Enov8 seems to have the best out-of-the-box environment dashboards, though customization could be simpler.

ServiceNow’s environment dashboards are limited but ultimately extensible.

Xebia has some solid reports, but they are more deployment-focused.

Plutora relies on a new “Tableau” extension. It is getting there but feels disjointed.

Bronze:

Apwide leverages Jira’s native capabilities.

Omnium’s approach is somewhat “download/export” focused.

Area-9 Extensibility

The ability to have the product do whatever you want.

Think of Salesforce or SAP.

Gold Medal Position:

ServiceNow – An Extensible Engine. You can use it to build anything.

Enov8 – An “Object Oriented” Extensible Engine. You can use it to build anything.

Silver:

Plutora has broad customization features so you can “partially” alter its behaviour.

Bronze:

Xebia allows customization of your processes but not the platform itself.

With Apwide & Omnium, you basically get what you get.

Area-10 Cost

The money-ball question, and potentially the most important for some.

Gold Medal Position:

Low Cost of Entry – Apwide, Enov8 (Free Team Edition) & Omnium

Silver:

Medium – Plutora & Xebia

Bronze:

Expensive – ServiceNow (Just add another “0” for licensing & tailoring services)

The “Test Environment Management Tool” Score Card

Overall Test Environment Management Platform Rating

Final TEM Tool Positions

| Position | Player | Findings |
| --- | --- | --- |
| #1 | Enov8 | Very much a Test Environment centric solution. |
| #2 | ServiceNow | An extensible ITSM solution, expensive but powerful. |
| #3 | Plutora | More focused on Release Planning. |
| #4 | Apwide | Simple booking tool that has its place at the table. |
| #4 | Xebia | More focused on Continuous Delivery. |
| #5 | Omnium | Inexpensive and will be the right fit for some. |

Note: Scoring was limited to the ten key areas recognised by TEMDOT as the most important for successful Test Environment Management. The scores do not reflect broader functionality, i.e. functionality that may be deemed more important by your organization. If you feel there are inaccurate statements in this comparison or a tool is missing, please reach out using our contact form.